Starter Guide for Beginners - Step03 IMPORTANT SEO COMPONENTS - ARTICLE GATE

3 IMPORTANT SEO COMPONENTS

Technical SEO

The technical SEO guide covers optimization for the crawling and indexing phases of a site. With technical SEO, you help search engines reach your site, crawl it efficiently and index it without problems, and you can spot site errors along the way. This guide covers crawl budget, sitemaps, robots.txt, HTTP status codes, SSL certificates, page title and meta description tags, headings, hreflang language tags, image optimization and structured data.

Now let's answer the question of what is technical SEO by dividing it into sub-categories:

Crawl Budget

Crawl budget is the number of pages Google crawls on your site in a given time period, based on the importance it places on the site. Google sets a different crawl budget for each site.

Many factors determine your crawl budget. Optimizing them helps Google crawl more of your important pages, which supports your visibility in search results.

The factors that affect your crawl budget include the number of indexed pages, the quality of the content on those pages, site speed, internal links that guide bots correctly, fixing pages that return error codes or contain duplicate content, an SEO-friendly URL structure and preventing unnecessary pages from being crawled.

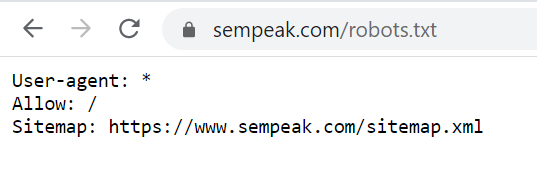

Sitemap

What is a sitemap? Search engines use the XML sitemap as a guide when crawling a website. A sitemap is an XML file that lists the pages on your website that you want indexed, which makes it critical for technical SEO.

What should we pay attention to when creating a sitemap:

- Make sure that all pages you want indexed are included in your sitemap.

- Your sitemap should be dynamic. Redirected pages and pages that return error codes should be removed automatically, and new pages should be added automatically.

- Your sitemap should be submitted to Google Search Console and referenced with a Sitemap line in the robots.txt file.

Important Tip: Every URL in your sitemap must be an indexable page that returns a “200 status code”.
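
As a minimal sketch of these points, the Python snippet below builds a small XML sitemap and lists only the pages that return a 200 status code. The `build_sitemap` helper, the `pages` list and the example.com URLs are hypothetical; a real site would take this data from its CMS or from a crawl.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemap protocol
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages):
    """Return sitemap XML for pages given as (url, status_code) pairs.

    Only URLs that answer with 200 are listed; redirected and
    error-returning URLs are left out.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, status in pages:
        if status != 200:
            continue
        url_element = ET.SubElement(urlset, "url")
        ET.SubElement(url_element, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")

# Hypothetical example data
pages = [
    ("https://www.example.com/", 200),
    ("https://www.example.com/old-page", 301),
    ("https://www.example.com/missing", 404),
    ("https://www.example.com/blog/seo-guide", 200),
]

sitemap_xml = build_sitemap(pages)
print(sitemap_xml)
```

To reference the sitemap from robots.txt, a single line such as `Sitemap: https://www.example.com/sitemap.xml` is enough (the URL here is a placeholder).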

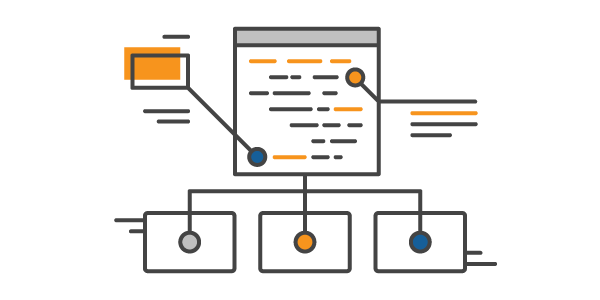

Robots.txt File (What is Robots.txt and How to Create it)

Robots.txt: It is a plain text file in the root directory of your site that guides search engine bots and lets you give them instructions about your site. This ensures that bots don't waste time crawling admin pages or pages with no SEO value. In other words, by drawing the boundaries of your site, you tell search engine bots which parts of your website they may not crawl.

In the robots.txt file, you can specify pages that you don't want "crawled".

Note: Be careful that the rules you add do not block pages with high organic traffic.

Important Tip: While a newly opened website is still under development, you can edit your Robots.txt file to keep Google from crawling it. Keep in mind that robots.txt controls crawling rather than indexing: a blocked URL can still appear in search results if other sites link to it.
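
To see how such rules behave, here is a small sketch that uses Python's standard `urllib.robotparser` module to test URLs against a robots.txt file. The rules, paths and example.com URLs are made up for illustration and are not a recommendation for any particular site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content
robots_txt = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

# Ask whether a given bot may crawl a given URL under these rules
can_crawl_blog = parser.can_fetch("Googlebot", "https://www.example.com/blog/seo-guide")
can_crawl_admin = parser.can_fetch("Googlebot", "https://www.example.com/admin/login")
print(can_crawl_blog, can_crawl_admin)
```

For a site that is still under development, a single `Disallow: /` rule under `User-agent: *` blocks crawling of every path.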

In our article "Robots.txt usage and testing tool", you can learn how to create the Robots.txt file.

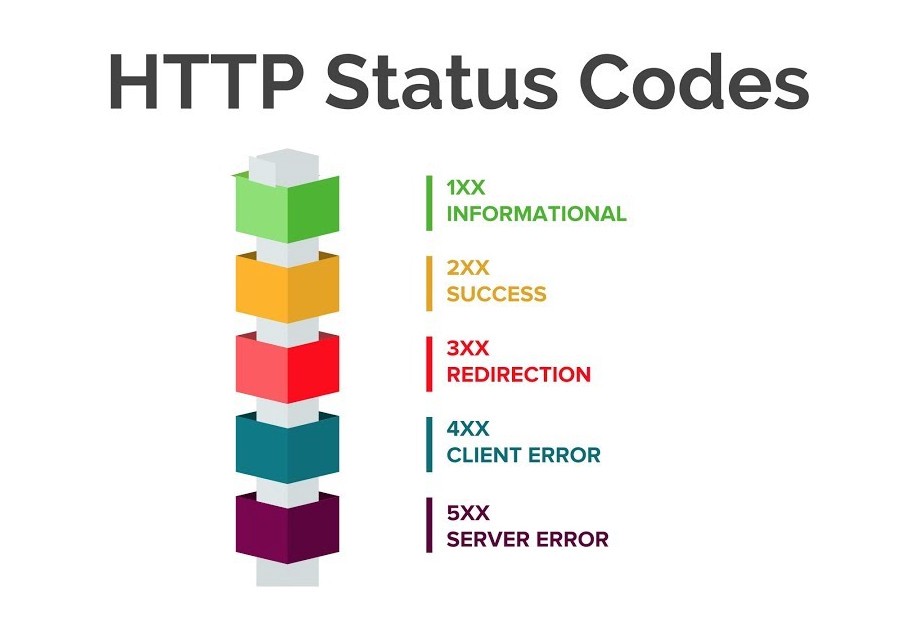

HTTP Status Codes

Status codes tell us about the errors or situations we encounter while browsing a website. Managing and optimizing these status codes properly is essential to sound technical SEO work.

Let's examine the definitions of important status codes that we use frequently in SEO consultancy:

200 Status Code (OK)

A 200 code indicates that everything is working properly and there is no problem.

301 Status Code (Permanent Redirect)

Indicates that a page is “permanently” redirected to another page.

302 Status Code (Temporary Redirect)

Indicates that a page is "temporarily" redirected to another page.

401 Status Code (Unauthorized)

It is the code returned when a user tries to enter a section that requires a password without valid credentials. It is not exactly an error; the page simply requires a login.

403 Status Code (Forbidden)

This is the code returned when users try to open pages or folders they are not permitted to access. Unlike 401, logging in does not help: the server understands the request but refuses it, so neither bots nor visitors can reach these areas.

404 Status Code (Not Found)

The most common error code, 404, occurs when a URL is deleted or the page no longer exists. We can fix 404 pages by restoring them or by redirecting them to a relevant page.

500 Status Code (Internal Server Error)

It is directly related to the server. In this case, it is necessary to contact the hosting provider or the software team.
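
The codes above can be turned into a simple lookup for a crawl report. This is only a sketch: it uses Python's standard `http.HTTPStatus` enum for the official names, while the `SEO_ACTIONS` table and the `describe` helper are hypothetical and the suggested actions are illustrative wording.

```python
from http import HTTPStatus

# Suggested follow-ups per status code; the wording is illustrative
SEO_ACTIONS = {
    HTTPStatus.OK: "no action needed",
    HTTPStatus.MOVED_PERMANENTLY: "confirm the redirect target is the right page",
    HTTPStatus.FOUND: "check whether the redirect should be permanent (301)",
    HTTPStatus.UNAUTHORIZED: "expected for login-protected sections",
    HTTPStatus.FORBIDDEN: "check that bots are not locked out of public pages",
    HTTPStatus.NOT_FOUND: "restore the page or redirect it",
    HTTPStatus.INTERNAL_SERVER_ERROR: "contact the server or software team",
}

def describe(code):
    """Return the code, its standard phrase and a suggested follow-up."""
    status = HTTPStatus(code)  # raises ValueError for codes the enum does not define
    action = SEO_ACTIONS.get(status, "review manually")
    return f"{status.value} {status.phrase}: {action}"

for code in (200, 301, 302, 401, 403, 404, 500):
    print(describe(code))
```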

HTTPS Version (What is SSL Certificate?)

When you install an SSL certificate on your server, your website can be accessed over https instead of http. This means that the information transferred between your visitors' browsers and your server (usernames, passwords, personal data, etc.) is encrypted, and it signals to search engines that your site is secure.

Security is one of the most important factors on the internet. In line with this, Google uses HTTPS as a ranking signal, so sites without an SSL certificate (security certificate) can lose rankings and be shown to users less often.

If your site still runs on HTTP, you should switch to HTTPS as soon as possible by installing an SSL certificate.

Meta Tags

Tags are one of the most important parts of professional SEO work. They inform both search engine bots and users about your site, make navigation easier and help Google understand and evaluate your pages.

Page Title

It should be short and informative about the content of your page. It should be unique, no longer than 50-60 characters, and focused on your keyword (without repeating it so often that it is perceived as spam).

Meta Description

It is a summary of the page content between 140 and 160 characters long. It should be as unique as possible and written separately for each URL. Copying the same description to more than one page can hurt your Google rankings.
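
The length and uniqueness guidelines for titles and descriptions are easy to check automatically. The sketch below uses the character limits from this guide; the `check_meta` and `duplicate_descriptions` helpers and the sample pages are hypothetical.

```python
from collections import defaultdict

TITLE_MAX = 60                  # upper end of the 50-60 character guideline
DESCRIPTION_RANGE = (140, 160)  # guideline range for meta descriptions

def check_meta(title, description):
    """Return a list of problems found in one page's title and description."""
    problems = []
    if not title:
        problems.append("title is empty")
    elif len(title) > TITLE_MAX:
        problems.append(f"title has {len(title)} characters (max {TITLE_MAX})")
    low, high = DESCRIPTION_RANGE
    if not low <= len(description) <= high:
        problems.append(
            f"description has {len(description)} characters (expected {low}-{high})"
        )
    return problems

def duplicate_descriptions(pages):
    """Map each description used on more than one URL to those URLs."""
    urls_by_description = defaultdict(list)
    for url, description in pages.items():
        urls_by_description[description].append(url)
    return {d: urls for d, urls in urls_by_description.items() if len(urls) > 1}

# Hypothetical pages: URL path -> meta description
pages = {
    "/sofas": "Browse our range of sofas.",
    "/chairs": "Browse our range of sofas.",
    "/tables": "Solid wood dining tables in every size.",
}

print(check_meta("Technical SEO Guide for Beginners", "Too short."))
print(duplicate_descriptions(pages))
```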

H1 - H6 (Heading Tags)

They are used to display the content on your page in a neat hierarchy and present it to bots. The main heading is written with the H1 tag, and the subheadings should follow in a suitable order with H2, H3 and the other heading tags.
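
A heading hierarchy can be checked with a few lines of Python. This sketch uses the standard `html.parser` module; the `HeadingCollector` class and `heading_problems` function are hypothetical names, and the two rules it applies (exactly one H1, no skipped levels) are a common convention assumed here rather than a fixed requirement.

```python
from html.parser import HTMLParser

class HeadingCollector(HTMLParser):
    """Collect the levels of h1-h6 tags in document order."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))

def heading_problems(html):
    """Return a list of hierarchy problems found in the HTML string."""
    collector = HeadingCollector()
    collector.feed(html)
    levels = collector.levels
    problems = []
    if levels.count(1) != 1:
        problems.append(f"expected exactly one h1, found {levels.count(1)}")
    # A heading may go at most one level deeper than the one before it
    for previous, current in zip(levels, levels[1:]):
        if current > previous + 1:
            problems.append(f"h{previous} is followed by h{current}")
    return problems

print(heading_problems("<h1>Guide</h1><h2>Basics</h2><h3>Details</h3>"))
print(heading_problems("<h1>Guide</h1><h3>Details</h3>"))
```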

Image Alt Tag

It is the tag that describes your images to search engine bots. That is, it helps bots crawling your site understand what the images you use contain.

Example: You should create an alt tag for each image by choosing the description that best fits it, such as "red racing car" for the image of a car.
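
To find images that lack a description, a short script can scan a page's HTML. This is a minimal sketch with Python's standard `html.parser`; the `MissingAltFinder` class and the sample markup are hypothetical.

```python
from html.parser import HTMLParser

class MissingAltFinder(HTMLParser):
    """Collect the src of every img tag whose alt is missing or empty."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        alt = attributes.get("alt")
        # Note: an empty alt can be intentional for purely decorative images
        if alt is None or not alt.strip():
            self.missing.append(attributes.get("src", "(no src)"))

# Hypothetical page fragment
html = """
<img src="car.jpg" alt="red racing car">
<img src="logo.png">
<img src="banner.jpg" alt="">
"""

finder = MissingAltFinder()
finder.feed(html)
print(finder.missing)
```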

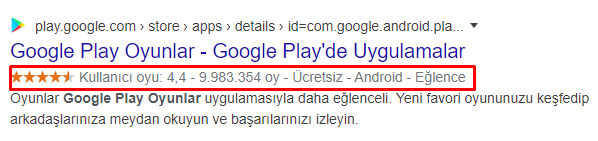

Structured Data Markup

Structured data plays a key role in describing your site's structure and content to Google bots, which helps Google match a search to the most relevant page. We can also call it speaking the language that search engines understand.

If you want your site to stand out to users with rich, detailed search results, you have to structure your data.

You can structure your data with Schema.org, which can improve how your pages appear in search results. Schema.org was founded through the cooperation of Google, Bing, Yandex and Yahoo! and was created to describe the content of HTML pages more clearly to the bots that crawl them.

Important Tip: With structured data, you can attract more users by creating a “Rich Snippet”.
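
One common way to add Schema.org markup is a JSON-LD script tag in the page. The sketch below generates one for an article with Python's standard `json` module; the `article_json_ld` helper and the sample values are hypothetical, and only a few of the properties Schema.org defines for `Article` are shown.

```python
import json

def article_json_ld(headline, author_name, date_published):
    """Return a JSON-LD script tag describing an article."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,
    }
    # Escape "</" so a value can never close the script tag early
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'

# Hypothetical sample values
snippet = article_json_ld("3 Important SEO Components", "Jane Doe", "2024-01-15")
print(snippet)
```

The resulting tag is placed in the HTML of the page it describes.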

Language Targeting (What is Hreflang Language Tag?)

It is an HTML attribute that contains the language code, and optionally the country code, that you want to target. With the language code you give Google, you indicate which language your page is in. It is used to serve users the version of your content in the language they are searching in. In addition, on multilingual sites, by specifying the equivalent of the same page in a different language with the hreflang tag, we indicate that two or more pages have the same content and differ only in language.

Important Tip: A language tag must be entered for each URL. Using it only on the homepage is incomplete.
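
Because every URL needs its own set of language tags, they are usually generated rather than written by hand. Here is a minimal sketch; the `hreflang_tags` helper and the example.com URLs are hypothetical.

```python
from html import escape

def hreflang_tags(alternates, default_url=None):
    """Build link tags for all language versions of one page.

    alternates maps a language (or language-country) code to the URL
    of that version. The same full set of tags belongs on every
    version of the page, including the version itself.
    """
    tags = [
        f'<link rel="alternate" hreflang="{escape(code)}" href="{escape(url)}" />'
        for code, url in alternates.items()
    ]
    if default_url:
        # x-default marks the fallback for users matching no listed language
        tags.append(
            f'<link rel="alternate" hreflang="x-default" href="{escape(default_url)}" />'
        )
    return tags

# Hypothetical language versions of one pricing page
alternates = {
    "en": "https://www.example.com/en/pricing",
    "tr": "https://www.example.com/tr/fiyatlar",
}

tags = hreflang_tags(alternates, default_url="https://www.example.com/en/pricing")
print("\n".join(tags))
```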

Breadcrumb Structure

The breadcrumb navigation structure is a form of site navigation that shows visitors where they are in the page hierarchy of the website. Websites with a large number of categories can simplify the user experience by using the breadcrumb structure.

It usually appears horizontally near the top of the web page and links back to each higher-level page in the hierarchy above the page the user is on.
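
As a rough illustration, a breadcrumb trail can be derived from a page's URL when the URL path mirrors the category hierarchy. That is an assumption that does not hold for every site, and the `breadcrumb_trail` helper and example URL are hypothetical.

```python
from urllib.parse import urlsplit

def breadcrumb_trail(url):
    """Return (label, link) pairs from the homepage down to the given page.

    Assumes each path segment is one level of the site hierarchy.
    """
    parts = urlsplit(url)
    root = f"{parts.scheme}://{parts.netloc}/"
    trail = [("Home", root)]
    path = ""
    for segment in parts.path.strip("/").split("/"):
        if not segment:
            continue
        path += segment + "/"
        label = segment.replace("-", " ").title()
        trail.append((label, root + path))
    return trail

trail = breadcrumb_trail("https://www.example.com/furniture/sofas/corner-sofa")
breadcrumb_text = " > ".join(label for label, _ in trail)
print(breadcrumb_text)
```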

SEO Content Strategy (Content SEO)

SEO-friendly content is keyword-optimized content designed to improve your ranking in search engines. With properly written SEO content, you can attract your target audience to your website and rank higher. In short, search engine optimization and content marketing work as a whole. With the Google Panda algorithm, content strategy for websites gained a lot of importance, and with each update Google attaches more importance to content.

To write an SEO-compatible article, you should pay attention to the following:

- It should not be duplicate content; it should be as original as possible (most important).

- It should have a keyword-focused title.

- It should be supported by visuals.

- It should be descriptive and readable.

- Semantically related words should be used to support the keyword.

- Heading tags should be used properly (h1, h2, h3).

- You can research competitor sites and blogs for ideas.

- Make sure it is neither long and boring nor short and incomplete.

Important Tip: You can get keyword ideas about your topics by browsing sites such as Wikipedia and Reddit.

Backlink Management

Links to your website from another site are called backlinks. References from other sites increase the awareness of your site and raise its value in the eyes of Google bots.

What Should We Consider When Getting Backlinks?

- Backlinks should be obtained from high-quality sites with high domain value.

- The linking site should be related to the content of your website. Example: It wouldn't make much sense for a website that sells "furniture" to get a backlink from a "car" focused site.

- Do not assume that more backlinks are always better; links from spam sites should not be taken.

- Front websites should not be set up just to link to your own site.

- You can promote your site in the comment area of blogs.

- You can place an embedded link in promotional articles.

Important Tip: Google checks your site with many algorithms, such as the “Penguin Algorithm”, and detects practices that are not suitable for SEO. That's why you should run your SEO content through an originality check.
